Your Bite-Sized Updates!

Stay ahead in the fast-moving world of technology with quick, easy-to-digest updates. From the latest gadgets and AI breakthroughs to cybersecurity alerts and software trends, we bring you the most important tech news. Just the key insights you need to stay informed.

Google I/O 2025 Highlights: AI Takes Center Stage

At its annual I/O developer conference, Google put artificial intelligence front and center, unveiling major updates that integrate AI more deeply across its core products, including Search, Gmail, and Chrome.

The company introduced improved AI models with enhanced capabilities in image generation, code writing, and task automation. These advancements mark a significant step toward more intelligent and responsive user experiences across Google’s ecosystem.

Looking to the future, Google also teased bold new initiatives: revamping video calls, creating a smarter and more conversational digital assistant, and collaborating with traditional eyewear brands to develop next-generation smart glasses.

While Android didn’t take the spotlight during the main keynote, Google had already revealed updates earlier in the week, including a fresh redesign of its mobile OS and enhancements to its device tracking system.

For a full breakdown of all announcements from Google I/O 2025, see the link below.

https://www.theverge.com/google/670224/google-io-2025-live-blog-gemini

Improvements in ‘reasoning’ AI models may slow down soon, analysis finds

A new analysis by Epoch AI, a nonprofit research institute, suggests that the AI industry’s ability to achieve massive performance gains from reasoning AI models may be nearing its limits, with progress potentially slowing within a year.

Reasoning models like OpenAI’s o3 have demonstrated significant improvements on AI benchmarks, particularly in mathematics and programming tasks. These models apply more computing power to problems, improving performance but requiring longer processing times compared to conventional models.

The development process involves first training a conventional model on vast amounts of data, then applying reinforcement learning techniques that provide feedback on solutions to complex problems.

OpenAI has dramatically increased computing power for training, using approximately 10 times more computing resources to train o3 than its predecessor o1, with most of this increase dedicated to reinforcement learning. The company plans to prioritize reinforcement learning further, eventually allocating more computing power to it than to initial model training.

However, Epoch AI identifies an upper bound for computing resources that can be effectively applied to reinforcement learning. According to analyst Josh You, while standard AI model training performance gains quadruple annually, reinforcement learning gains grow tenfold every 3-5 months.

He predicts that reasoning training progress will “probably converge with the overall frontier by 2026.”
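To put the two growth rates on the same footing, here is a small illustrative calculation that converts each into a per-year multiplier. The helper name `annualized_factor` is made up for this sketch, and it assumes each rate compounds steadily for a full year, which is exactly the assumption Epoch AI expects to break down.

```python
def annualized_factor(factor: float, period_months: float) -> float:
    """Convert 'grows by `factor` every `period_months` months' into a per-year multiplier."""
    return factor ** (12.0 / period_months)


# Figures quoted in the article; constant compounding is an assumption of this sketch.
standard = annualized_factor(4, 12)   # standard training: quadruples annually
rl_slow = annualized_factor(10, 5)    # reinforcement learning: tenfold every 5 months
rl_fast = annualized_factor(10, 3)    # reinforcement learning: tenfold every 3 months

print(f"Standard training: about {standard:.0f}x per year")
print(f"Reinforcement learning: about {rl_slow:.0f}x to {rl_fast:.0f}x per year")
```

On those assumptions, reinforcement learning gains would compound at roughly 250x to 10,000x per year against 4x for standard training, a gap that is hard to sustain for long.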

The analysis highlights a potential slowdown in one of AI’s most promising development areas, suggesting the industry may need to explore alternative approaches to maintain rapid progress in AI capabilities.

https://techcrunch.com/2025/05/12/improvements-in-reasoning-ai-models-may-slow-down-soon-analysis-finds/

Anthropic, Google Score Win by Nabbing OpenAI-Backed Harvey as a User

Harvey, a popular legal AI tool and one of OpenAI Startup Fund’s most successful portfolio companies, announced it will now use foundation models from Anthropic and Google in addition to OpenAI’s models.

This represents a significant win for OpenAI’s competitors, as Harvey is valued at $3 billion following a $300 million Series D led by Sequoia. Notably, Google’s GV led Harvey’s $100 million Series C round in July 2024.

Harvey’s decision came after internal testing with its BigLaw benchmark showed that different foundation models excel at different legal tasks. For example, Google’s Gemini 2.5 Pro excels at legal drafting but struggles with pre-trial tasks, while OpenAI’s o3 performs well on pre-trial work.

Rather than training its own models, Harvey will fine-tune high-performing reasoning models from multiple vendors for the legal market. The company will also publish a public leaderboard ranking model performance on legal tasks, increasing competitive pressure among AI providers.

https://techcrunch.com/2025/05/13/anthropic-google-score-win-by-nabbing-openai-backed-harvey-as-a-user/

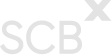

AI Benchmarks Are Broken—Here’s How Researchers Want to Fix Them

SWE-Bench, a benchmark released in November 2024 to evaluate AI coding abilities using real-world GitHub issues in Python, quickly became a popular standard among major AI players like OpenAI, Anthropic, and Google. However, its popularity has revealed deeper issues in how the AI industry evaluates models. Many developers are “gaming” the benchmark by tailoring models specifically to pass it, rather than improving general coding ability. As a result, some high-scoring models fail when applied to tasks in other programming languages.

This problem reflects a broader “evaluation crisis” in AI, where industry benchmarks often lack validity—they don’t truly measure what they claim to. While benchmarks like ImageNet once aligned closely with real-world applications, newer benchmarks such as MMLU and WebArena are criticized for vague goals, poor external validity, and even manipulation.

To address this, researchers are pushing for a shift towards validity-focused evaluation, drawing from social science methodologies. They emphasize defining clear capabilities, breaking them into measurable subskills, and linking benchmarks to real-world tasks. New initiatives like BetterBench and efforts from organizations like Hugging Face and EleutherAI aim to create better, more transparent benchmarks.

Despite these efforts, AI companies continue relying on flawed but familiar benchmarks to demonstrate general intelligence. Many experts believe reform is necessary but difficult, especially as the race for AGI (Artificial General Intelligence) continues to drive the field forward at breakneck speed.

https://www.technologyreview.com/2025/05/08/1116192/how-to-build-a-better-ai-benchmark/

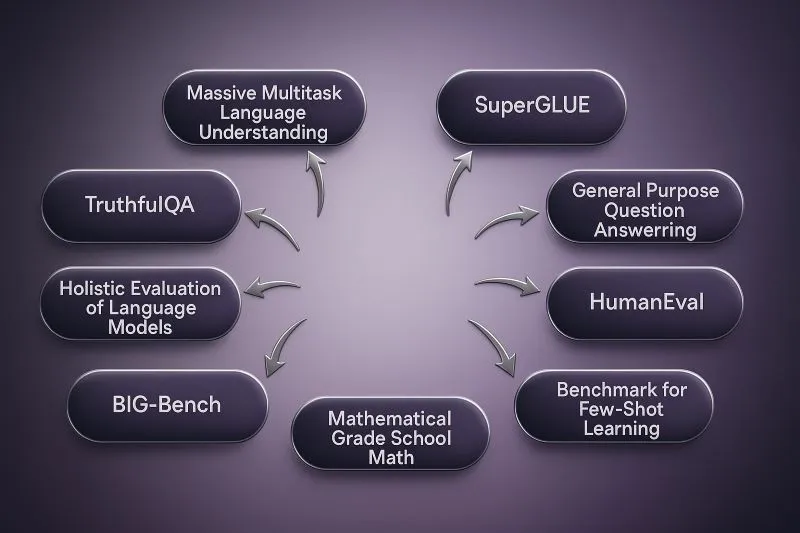

MIT engineers advance toward a fault-tolerant quantum computer

MIT researchers achieved the strongest nonlinear light-matter coupling ever demonstrated in a quantum system, bringing quantum computers closer to fault tolerance and practical applications.

Using a novel superconducting circuit architecture, the team showed coupling about 10 times stronger than previous demonstrations, potentially enabling quantum processors to run 10 times faster than current systems.

The breakthrough addresses a critical bottleneck in quantum computing: the readout process that measures quantum states. Faster readout is essential because quantum computers need to measure results and perform error corrections before compounding errors reduce accuracy and reliability.

Lead researcher Yufeng “Bright” Ye noted this advancement could “eliminate one of the bottlenecks in quantum computing” and accelerate progress toward fault-tolerant quantum computers capable of real-world applications including rapid material simulation and faster machine-learning models.

While significant work remains before practical implementation, demonstrating the fundamental physics represents a major step toward quantum operations achievable in nanoseconds. The research was published in Nature Communications.

https://news.mit.edu/2025/mit-engineers-advance-toward-fault-tolerant-quantum-computer-0430

Encryption provider finds shortcomings in adoption of post-quantum cryptography solutions

Encryption provider DigiCert reports that organizations are unprepared for quantum computing threats, despite ongoing U.S. government efforts to implement post-quantum cryptography (PQC) on federal systems.

A survey of over 1,000 cybersecurity managers across the US, UK, and Australia revealed significant gaps between awareness and implementation:

- 69% of organizations recognize quantum risks to current encryption

- Only 5% have implemented quantum-safe encryption

- 46.4% report substantial portions of their encrypted data could be compromised

Approximately 30% of respondents estimate it will take 1-2 years to adopt PQC across their organizations, while 33% estimate 3-5 years. These timelines sit dangerously close to when many respondents expect quantum computers capable of breaking current encryption to emerge: 34.7% predict this within two years, and 34.5% within 3-5 years.

The Biden administration originally set a 2035 deadline for federal agencies to implement PQC but accelerated this timeline in a January 16, 2025 executive order calling for implementation “as soon as practicable.”

Nearly half of surveyed organizations believe 10-25% of their data would be vulnerable to “harvest now, decrypt later” attacks, where threat actors steal encrypted data to decode after quantum computers become available.

https://insidecybersecurity.com/daily-news/encryption-provider-finds-shortcomings-adoption-post-quantum-cryptography-solutions

Xi Jinping Calls for AI Self-Reliance Amid U.S.-China Tech Race

Chinese President Xi Jinping has called for greater “self-reliance and self-strengthening” in AI development, emphasizing the need to build an independent and controllable AI ecosystem. Speaking at a Politburo study session, Xi urged China to focus on core technologies like high-end chips and foundational software, leveraging the country’s centralized national system.

He also stressed the urgency of establishing AI regulations, risk warning systems, and emergency response mechanisms to ensure AI safety and reliability.

The comments come as China narrows the AI gap with the U.S., highlighted by Chinese startup DeepSeek’s recent launch of a cost-efficient AI reasoning model using less advanced chips.

Xi reiterated his stance that AI should not be a “game for the rich,” and called for more international cooperation in AI governance.

https://www.reuters.com/world/china/chinas-xi-calls-self-sufficiency-ai-development-amid-us-rivalry-2025-04-26/